Reverse Engineering ChatGPT Plugins with LangChain

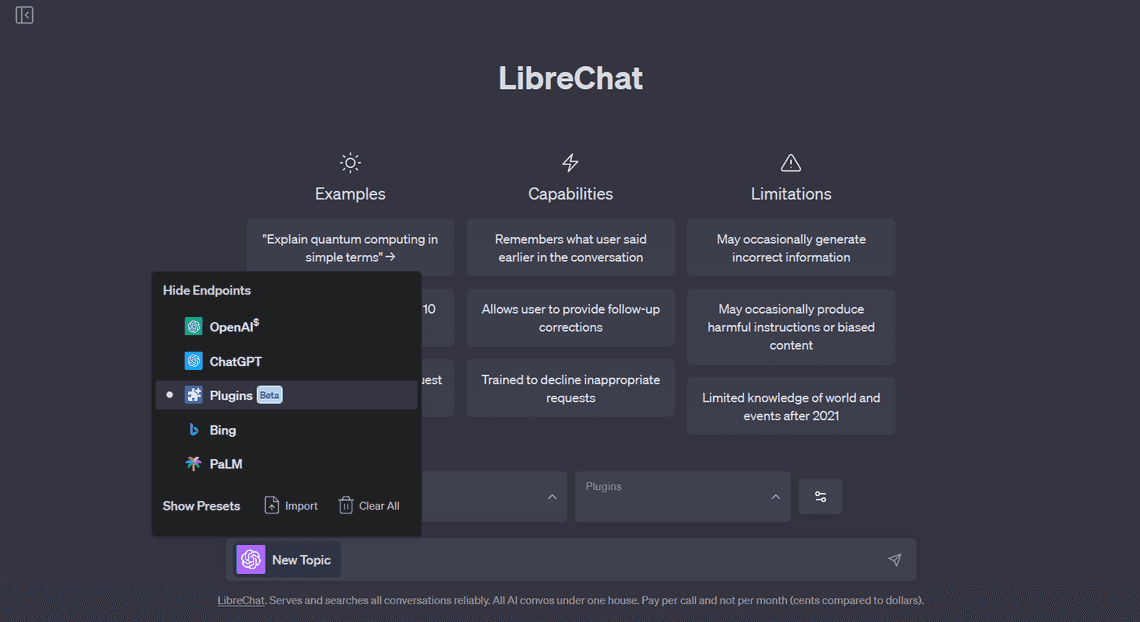

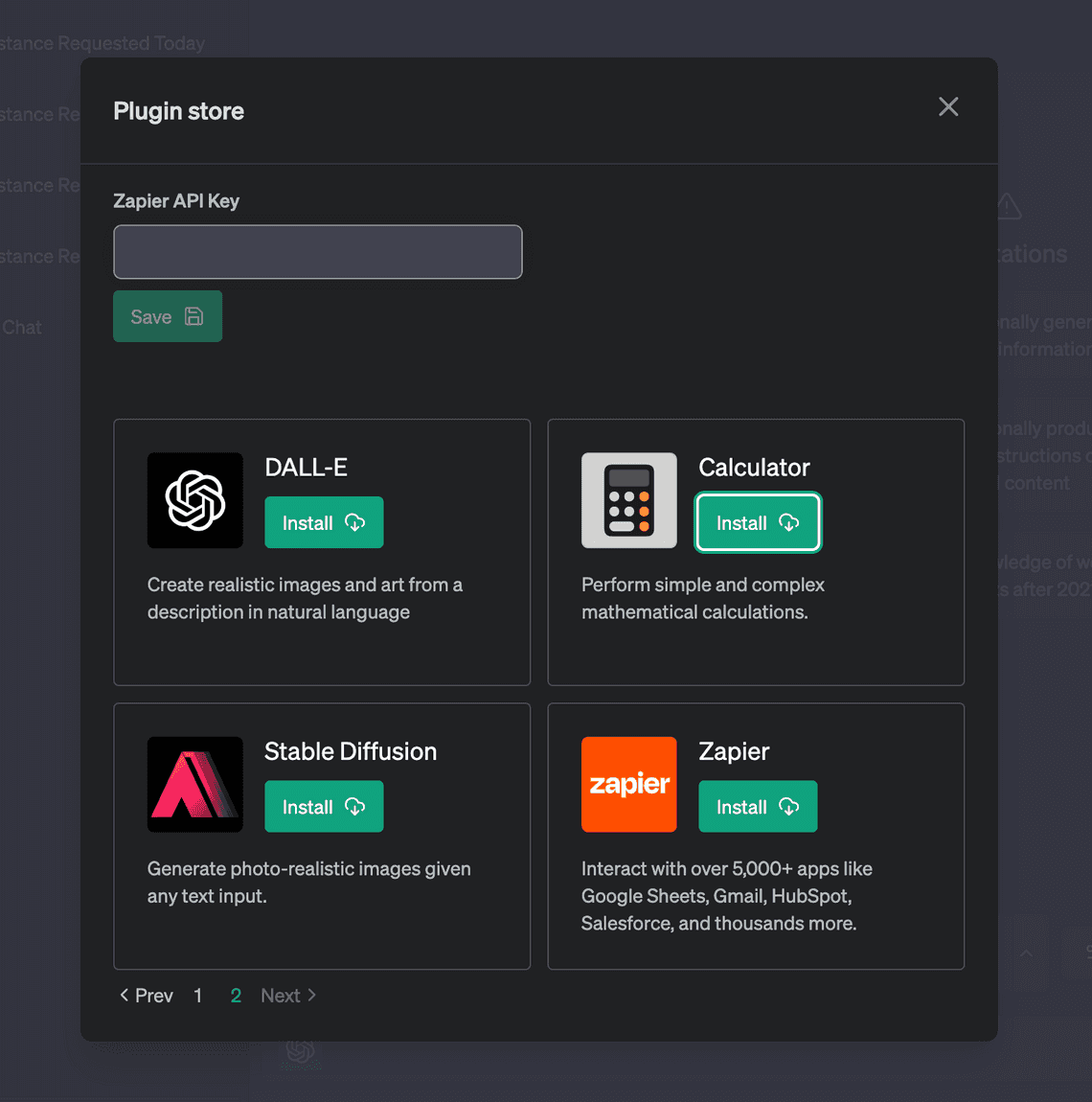

LibreChat is a clone of the original ChatGPT that aims to bring all of your AI conversations under one roof with extended features, while celebrating the user experience of ChatGPT. The project supports AI model selection from several sources, including the OpenAI API, BingAI, Palm2, and ChatGPT Browser. It provides response streaming identical to ChatGPT, editing and resubmitting messages just like the official site (with conversation branching), and the ability to customize the internal settings of the AI models. You can also create, save, and share custom prompts and presets for ChatGPT, Bing, and Palm2. LibreChat offers a number of features that you can’t find in ChatGPT, but above all, we are excited to announce LibreChat Plugins, released with version 0.5.0.

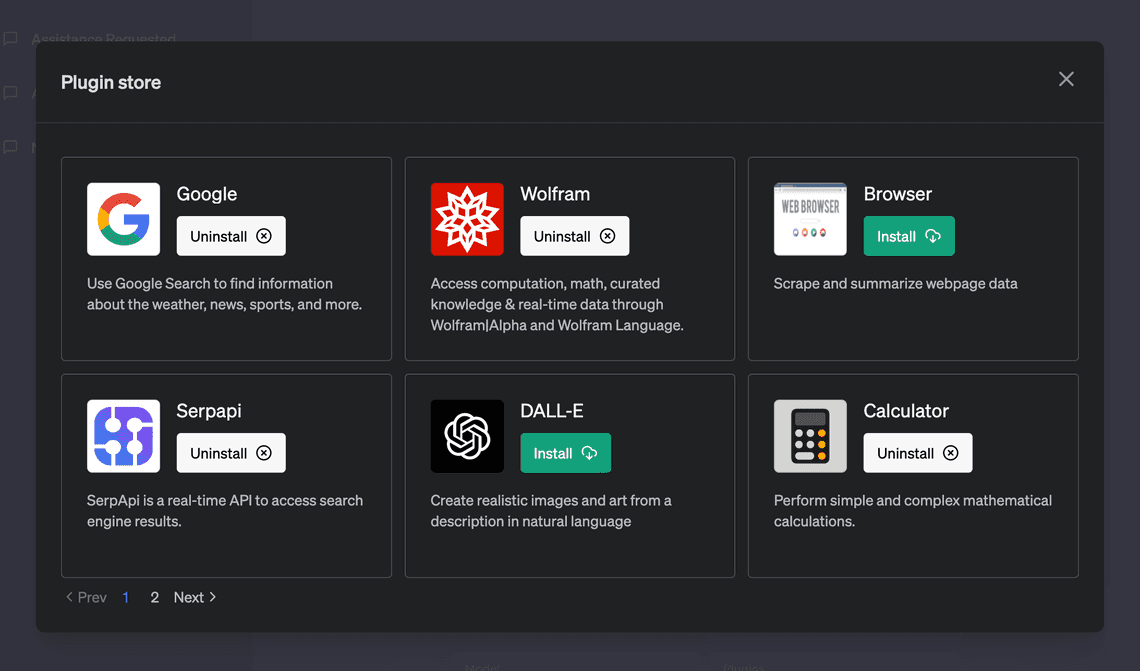

What are LibreChat Plugins?

LibreChat plugins mimic the functionality of ChatGPT plugins, and while ChatGPT plugins are currently only available to ChatGPT Plus subscribers, LibreChat plugins can be used now for free. Because LibreChat is an open source project, it opens the door for developers to use and extend the functionality of ChatGPT in a local environment or on an internal company network, for example, where all of your data can live where you want it. For this first iteration, the plugin system was written in JavaScript using the LangChain JavaScript library, since LibreChat is already a JavaScript application. However, because Python’s LangChain library is considerably further along than its JavaScript counterpart, we are already working on porting the LibreChat Plugins system to Python for the next release. This will allow us to take advantage of other ‘agentic’ libraries, like HuggingFace Transformers Agent and Microsoft Guidance.

LibreChat Plugins vs. ChatGPT

One thing that makes LibreChat plugins unique is that you can switch between plugins at any time within a single conversation, which is something ChatGPT can’t do: once you select a set of plugins there, you are stuck with them for the rest of the conversation. ChatGPT plugins use OpenAPI specs, which are essentially just information that tells the AI how to interact with an API. In other words, they are not actual LangChain tools, but rather a set of API endpoints along with instructions for the AI about when and how the plugin should be used. This is a disadvantage of ChatGPT plugins, mainly because you don’t get the level of control and flexibility that you get with LangChain and LibreChat plugins.
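For context, a ChatGPT plugin is described by a small manifest file (ai-plugin.json) that points at an API specification and carries the instructions for the model. The sketch below shows the general shape of such a manifest as a JavaScript object. Every value (names, URLs, email) is made up for illustration; only the field names are meant to follow the manifest format.

```javascript
// Illustrative shape of a ChatGPT plugin manifest (ai-plugin.json), written as a
// JavaScript object. All values are hypothetical placeholders.
const examplePluginManifest = {
  schema_version: 'v1',
  name_for_human: 'Example Todo',
  name_for_model: 'example_todo',
  description_for_human: 'Manage a simple todo list.',
  // This text is what tells the model when and how to use the plugin.
  description_for_model: 'Plugin for adding, listing, and deleting todo items.',
  auth: { type: 'none' },
  // The API itself is described by a separate specification at this URL.
  api: { type: 'openapi', url: 'https://example.com/openapi.yaml' },
  logo_url: 'https://example.com/logo.png',
  contact_email: 'support@example.com',
  legal_info_url: 'https://example.com/legal',
};
```

Note that nothing in the manifest is executable: the model only receives descriptions, which is the contrast with code-based tools drawn above.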

Another thing that makes LibreChat plugins unique is that they are not tied to a specific language model. You can use LibreChat plugins with any language model that is supported by LangChain. This means that you can use LibreChat plugins with ChatGPT, BingAI, Palm2, and any other language model that is supported by LangChain.

For the purposes of this article, I will first focus on the conceptual elements of the LLM plugins architecture such as Agents and Tools, then I will provide some detailed information on how to create your own plugins for LibreChat.

Limitations of Large Language Models and the Power of Plugins

Large language models like ChatGPT, BingAI, and Palm2, while powerful, have their limitations. They can generate coherent and contextually relevant responses, but they lack the ability to interact with the environment or access external data sources. This is where LangChain comes in. By leveraging agents and tools, these limitations can be circumvented.

LangChain allows for the creation of plugins that can enhance the capabilities of these language models. These plugins can enable the language models to interact with APIs, access up-to-date information, and take the appropriate actions to complete tasks.

For example, I could use OpenWeatherMap to have ChatGPT get this weekend’s weather for my location and, if it’s going to be sunny, book a reservation at my favorite outdoor restaurant using OpenTable. Or perhaps I need some good ideas for dinner tomorrow. I could have the AI model find the five most popular salmon recipes, work with the LLM to customize one, then have it add all of the ingredients to my Instacart shopping cart. Using the Zapier plugin, I can connect the LLM to, say, Trello and Slack, and tell it to create a new work item in the issues column of a Trello board, assign it to Zach, then send Zach a message with the link to let him know.

AI can be used for more than just the obvious things, making the possibilities truly endless. OpenAI asserts that “The expansion of use cases made feasible by plugins presents novel approaches to existing problems with large language models.”

How Plugins Work

Plugins are essentially a collection of tools that can be used to extend the capabilities of a language model. They are designed to be modular and reusable, and can be used to accomplish a wide variety of tasks. To understand how plugins work, one must understand the concepts of agents and tools in the context of an LLM Chain.

LangChain is a framework designed for developing applications powered by language models. It is built around two key principles: being data-aware and agentic. Being data-aware means that the language model can connect to other sources of data, while being agentic allows the language model to interact with its environment and make appropriate decisions.

We are using LangChain as the framework for LibreChat plugins, but the same or (at least) similar concepts hold true for other frameworks like HuggingFace’s Transformers Agent, and Microsoft Guidance.

LangChain provides modular abstractions for the components necessary to work with language models. These components can be thought of as the tools that the agents use to perform their tasks. They include Models, Prompts, Memory, Indexes, Chains, Agents, Tools, and Callbacks.

Models and Prompts deal with the language model itself and the input it receives. Memory refers to state that is persisted between calls of a chain/agent, while Indexes allow language models to interact with application-specific data. Chains are structured sequences of calls to an LLM or to a different utility, and Callbacks allow for logging and streaming the intermediate steps of any chain. At the heart of the plugin architecture are Agents and Tools. Agents are the decision makers, while Tools are the functions that perform specific tasks.

Agents: The Decision Makers

An agent is a Chain in which a language model, given a high-level directive and a set of tools, repeatedly decides an action, executes the action, and observes the outcome until the high-level directive is complete. This could be a chatbot that’s trained to understand and respond to user queries, or a more complex system that interacts with other APIs or databases.
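The decide, execute, observe loop described above can be sketched in a few lines of plain JavaScript. This is a minimal illustration and not LangChain’s implementation: runAgent, fakeModel, and the shape of the tools object are hypothetical names, and the “model” is a hard-coded function standing in for an LLM.

```javascript
// Hypothetical sketch of an agent loop; not LangChain's actual implementation.
// A "tool" here is just an object with a name and an async call(input) function.
const tools = {
  calculator: {
    name: 'calculator',
    // Adds numbers separated by "+", e.g. "2+3".
    call: async (input) =>
      String(input.split('+').reduce((sum, n) => sum + Number(n), 0)),
  },
};

// Stand-in for an LLM: picks an action based on what has been observed so far.
async function fakeModel(directive, steps) {
  if (steps.length === 0) {
    return { tool: 'calculator', input: '2+3' };
  }
  // Once there is an observation, finish with a final answer.
  return { finalAnswer: `The result is ${steps[steps.length - 1].observation}` };
}

async function runAgent(directive, model, maxIterations = 5) {
  const steps = [];
  for (let i = 0; i < maxIterations; i++) {
    const decision = await model(directive, steps); // 1. decide
    if (decision.finalAnswer !== undefined) {
      return decision.finalAnswer;
    }
    const tool = tools[decision.tool];
    const observation = tool
      ? await tool.call(decision.input) // 2. execute
      : `Unknown tool: ${decision.tool}`;
    steps.push({ ...decision, observation }); // 3. observe
  }
  return 'Stopped: iteration limit reached.';
}

runAgent('What is 2 + 3?', fakeModel).then(console.log); // The result is 5
```

The iteration limit is a design choice in this sketch: without one, a model that never produces a final answer would loop forever.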

Agents are truly a superpower of LangChain. They can be used to automate workflows, perform research, and create new and original content.

There are several types of agents:

Zero-shot-react-description: Determines which tool to use based solely on the tool’s description.

React-docstore: Utilizes Search and Lookup tools to interact with a docstore.

Self-ask-with-search: Looks up factual answers to questions using search.

Conversational-react-description: Assists in conversations, and the prompt is intended to facilitate a friendly and helpful dialogue. The ReAct framework is utilized to determine the appropriate approach, and past interactions are stored in memory for reference.

In the context of LibreChat, we use a custom agent that extends the LangChain ZeroShotAgent.

Tools: How Language Models Interact with Other Resources

A tool, on the other hand, is a function designed to perform a specific task. Examples of tools can range from Google Search, database lookups, Python REPL, to other chains.

The power of LangChain lies in the ability to chain together these tools to complete complex tasks. For instance, an agent can use a tool to search the web or a database for relevant information on a topic, use the results to make a decision, and then use another tool to execute an action based on that decision.

Additionally, toolkits are groups of tools, bundled with an agent, that solve a particular problem. LangChain has several built in. Examples include the Gmail Toolkit for writing emails on your behalf (don’t worry, it won’t send them unless you tell it to), the Playwright toolkit for using the browser to interact with dynamically rendered sites, and a Jira toolkit, which is a wrapper around the atlassian-python-api library and can be used to search, create, and manage Jira tickets.

To see how tools are implemented with LibreChat plugins, take a look at the tools folder of the repository. Notice how the tool description plays a critical role in the agent’s decision making process. For example, for the WolframAlpha tool, the following description is used:

`Access computation, math, curated knowledge & real-time data through wolframAlpha. - Understands natural language queries about entities in chemistry, physics, geography, history, art, astronomy, and more. - Performs mathematical calculations, date and unit conversions, formula solving, etc. General guidelines: - Make natural-language queries in English; translate non-English queries before sending, then respond in the original language. - Inform users if information is not from wolfram. - ALWAYS use this exponent notation: "6*10^14", NEVER "6e14". - Your input must ONLY be a single-line string. - ALWAYS use proper Markdown formatting for all math, scientific, and chemical formulas, symbols, etc.: '$$\n[expression]\n$$' for standalone cases and '\( [expression] \)' when inline. - Format inline wolfram Language code with Markdown code formatting. - Convert inputs to simplified keyword queries whenever possible (e.g. convert "how many people live in France" to "France population"). - Use ONLY single-letter variable names, with or without integer subscript (e.g., n, n1, n_1). - Use named physical constants (e.g., 'speed of light') without numerical substitution. - Include a space between compound units (e.g., "Ω m" for "ohm*meter"). - To solve for a variable in an equation with units, consider solving a corresponding equation without units; exclude counting units (e.g., books), include genuine units (e.g., kg). - If data for multiple properties is needed, make separate calls for each property. - If wolfram provides multiple 'Assumptions' for a query, choose the more relevant one(s) without explaining the initial result. If you are unsure, ask the user to choose. - Performs complex calculations, data analysis, plotting, data import, and information retrieval.`;

This description is used by the agent to determine whether or not to use the tool. If the agent determines that the tool is appropriate, it will then use the tool to perform the task.
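To make the role of the description concrete, here is a rough sketch of how tool names and descriptions might be rendered into the text that the agent’s language model sees. The function name renderToolPrompt and the prompt wording are assumptions for illustration; the actual prompt that LangChain or LibreChat builds is different and more detailed.

```javascript
// Hypothetical sketch: how tool names and descriptions might be rendered into
// the prompt an agent's language model sees. The wording is illustrative only.
function renderToolPrompt(tools) {
  const toolLines = tools.map((tool) => `${tool.name}: ${tool.description}`);
  const toolNames = tools.map((tool) => tool.name).join(', ');
  return [
    'You have access to the following tools:',
    ...toolLines,
    `When a tool is needed, choose one of: ${toolNames}`,
  ].join('\n');
}

const prompt = renderToolPrompt([
  { name: 'wolfram', description: 'Access computation, math, curated knowledge & real-time data.' },
  { name: 'calculator', description: 'Useful for simple arithmetic.' },
]);
console.log(prompt);
```

Because the model only ever sees this text, a vague description tends to produce vague tool selection, which is why the WolframAlpha description spells out its guidelines in such detail.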

How to Build Your Own Custom LibreChat Plugin

The key to creating a custom plugin is to extend the Tool class and implement the _call method. The _call method is where you define what your plugin does. You can also define helper methods and properties in your class to support the functionality of your plugin.

The process of creating a custom plugin can be broken down into the following six steps:

Step 1: Import Required Modules

Import the necessary modules for your plugin, including Tool from langchain/tools and any other modules your plugin might need.

    const { Tool } = require('langchain/tools');
    const axios = require('axios');

Step 2: Define Your Plugin Class

Define a class for your plugin that extends Tool. Set the name and description properties in the constructor. If your plugin requires credentials or other variables, set them from the fields parameter or from a method that retrieves them from the process environment.

    class WolframAlphaAPI extends Tool {
      constructor(fields) {
        super();
        this.name = 'wolfram';
        this.apiKey = fields.WOLFRAM_APP_ID || this.getAppId();
        this.description = `Access computation, math, curated knowledge & real-time data...`;
      }
      ...
    }

Notice that the Wolfram API key is retrieved from the fields parameter, which gets passed in from the agent. This allows the API key to be provided by the user from the UI when the plugin is installed.

If the key is not found, the getAppId method is called to retrieve it from the process environment.

    getAppId() {
      const appId = process.env.WOLFRAM_APP_ID;
      if (!appId) {
        throw new Error('Missing WOLFRAM_APP_ID environment variable.');
      }
      return appId;
    }

Step 3: Define Helper Methods

Define helper methods within your class to handle specific tasks if needed.

    class WolframAlphaAPI extends Tool {
      ...
      async fetchRawText(url) {
        try {
          const response = await axios.get(url, { responseType: 'text' });
          return response.data;
        } catch (error) {
          console.error(`Error fetching raw text: ${error}`);
          throw error;
        }
      }

      createWolframAlphaURL(query) {
        // Clean up query
        const formattedQuery = query.replaceAll(/`/g, '').replaceAll(/\n/g, ' ');
        const baseURL = 'https://www.wolframalpha.com/api/v1/llm-api';
        const encodedQuery = encodeURIComponent(formattedQuery);
        const appId = this.apiKey || this.getAppId();
        const url = `${baseURL}?input=${encodedQuery}&appid=${appId}`;
        return url;
      }
      ...
    }
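As a quick worked example of what the URL helper produces, the sketch below repeats its logic as a standalone function so it can be run on its own. The appId parameter and the 'DEMO-APPID' value are placeholders introduced for illustration; in the class, the App ID comes from this.apiKey or getAppId().

```javascript
// Standalone version of the URL-building helper, for illustration only.
// 'DEMO-APPID' is a placeholder, not a real Wolfram App ID.
function createWolframAlphaURL(query, appId) {
  // Strip backticks and turn newlines into spaces, as the class method does.
  const formattedQuery = query.replaceAll(/`/g, '').replaceAll(/\n/g, ' ');
  const baseURL = 'https://www.wolframalpha.com/api/v1/llm-api';
  return `${baseURL}?input=${encodeURIComponent(formattedQuery)}&appid=${appId}`;
}

const url = createWolframAlphaURL('`France`\npopulation', 'DEMO-APPID');
console.log(url);
// https://www.wolframalpha.com/api/v1/llm-api?input=France%20population&appid=DEMO-APPID
```

The cleanup step matters because language models often wrap their tool input in backticks or spread it over several lines, and the tool description asks for a single-line string.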

Step 4: Implement the _call Method

Implement the _call method where the main functionality of your plugin is defined. This method is called when the language model decides to use your plugin. It should take an input parameter and return a result. If an error occurs, the function should return a string representing an error, rather than throwing an error.

    class WolframAlphaAPI extends Tool {
      ...
      async _call(input) {
        try {
          const url = this.createWolframAlphaURL(input);
          const response = await this.fetchRawText(url);
          return response;
        } catch (error) {
          if (error.response && error.response.data) {
            console.log('Error data:', error.response.data);
            return error.response.data;
          } else {
            console.log(`Error querying Wolfram Alpha`, error.message);
            // throw error;
            return 'There was an error querying Wolfram Alpha.';
          }
        }
      }
    }

The _call function is what gets called by the agent. When an error occurs, a string representing the error should be returned. This allows the error to be passed to the LLM so it can decide how to handle it.
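The error-as-string convention can be seen in isolation with a small sketch. To keep it runnable without dependencies, it uses a stand-in Tool base class rather than the one from langchain/tools; the assumption is that the real class exposes a public call() that delegates to _call(). FlakyTool and fetchData are hypothetical names.

```javascript
// Stand-in for LangChain's Tool base class so the sketch runs without dependencies.
// Assumption: the real class exposes a public call() that delegates to _call().
class Tool {
  async call(input) {
    return this._call(input);
  }
}

// Hypothetical tool whose upstream request always fails.
class FlakyTool extends Tool {
  constructor() {
    super();
    this.name = 'flaky';
    this.description = 'Demonstrates returning errors as strings.';
  }

  async fetchData() {
    throw new Error('network unreachable');
  }

  async _call(input) {
    try {
      return await this.fetchData(input);
    } catch (error) {
      // Return a message instead of throwing, so the agent can read it as an
      // observation and decide what to do next.
      return `There was an error running ${this.name}: ${error.message}`;
    }
  }
}

new FlakyTool().call('anything').then((observation) => console.log(observation));
// There was an error running flaky: network unreachable
```

If _call threw instead, the exception would typically abort the whole agent run rather than give the model a chance to retry or pick another tool.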

Step 5: Export Your Plugin and Import into handleTools.js

Export your plugin and import it into handleTools.js.

tools/wolfram.js:

    module.exports = WolframAlphaAPI;

api/app/langchain/tools/handleTools.js:

    const WolframAlphaAPI = require('./wolfram');

Next, in handleTools.js, find the loadTools function and add your plugin to the toolConstructors object.

    const loadTools = async ({ user, model, tools = [], options = {} }) => {
      const toolConstructors = {
        wolfram: WolframAlphaAPI,
        calculator: Calculator,
        google: GoogleSearchAPI,
        // Keys containing a hyphen must be quoted to be valid JavaScript.
        'dall-e': OpenAICreateImage,
        'stable-diffusion': StableDiffusionAPI
      };
      ...
    }

Most custom plugins will require more advanced initialization, which can be done in the customConstructors object. The following example shows how the browser, serpapi, and zapier plugins are initialized:

    const customConstructors = {
      browser: async () => {
        let openAIApiKey = process.env.OPENAI_API_KEY;
        if (!openAIApiKey) {
          openAIApiKey = await getUserPluginAuthValue(user, 'OPENAI_API_KEY');
        }
        return new WebBrowser({ model, embeddings: new OpenAIEmbeddings({ openAIApiKey }) });
      },
      serpapi: async () => {
        let apiKey = process.env.SERPAPI_API_KEY;
        if (!apiKey) {
          apiKey = await getUserPluginAuthValue(user, 'SERPAPI_API_KEY');
        }
        return new SerpAPI(apiKey, {
          location: 'Austin,Texas,United States',
          hl: 'en',
          gl: 'us'
        });
      },
      zapier: async () => {
        let apiKey = process.env.ZAPIER_NLA_API_KEY;
        if (!apiKey) {
          apiKey = await getUserPluginAuthValue(user, 'ZAPIER_NLA_API_KEY');
        }
        const zapier = new ZapierNLAWrapper({ apiKey });
        return ZapierToolKit.fromZapierNLAWrapper(zapier);
      },
      plugins: async () => {
        return [
          new HttpRequestTool(),
          await AIPluginTool.fromPluginUrl(
            "https://www.klarna.com/.well-known/ai-plugin.json",
            new ChatOpenAI({ openAIApiKey: options.openAIApiKey, temperature: 0 })
          ),
        ]
      }
    };
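One way to picture how the two registries could work together is sketched below. This is a guess at the general pattern, not the actual loadTools implementation in LibreChat: resolveTools, FakeSearch, and the FAKE_SEARCH_KEY variable are hypothetical, and the sketch ignores details such as constructors that return a toolkit or an array.

```javascript
// Hypothetical sketch of resolving requested tool keys against two registries.
// The real loadTools function in LibreChat may differ; class names are made up.
class Calculator {
  constructor() {
    this.name = 'calculator';
  }
}

class FakeSearch {
  constructor(apiKey) {
    this.name = 'search';
    this.apiKey = apiKey;
  }
}

// Simple tools: constructed directly with no extra setup.
const toolConstructors = { calculator: Calculator };

// Tools needing extra setup: async factories that may look up credentials.
const customConstructors = {
  search: async () => new FakeSearch(process.env.FAKE_SEARCH_KEY || 'user-supplied-key'),
};

async function resolveTools(requestedKeys) {
  const resolved = [];
  for (const key of requestedKeys) {
    if (customConstructors[key]) {
      resolved.push(await customConstructors[key]());
    } else if (toolConstructors[key]) {
      resolved.push(new toolConstructors[key]());
    }
    // Unknown keys are skipped in this sketch.
  }
  return resolved;
}

resolveTools(['calculator', 'search', 'missing']).then((tools) =>
  console.log(tools.map((tool) => tool.name))
);
```

Keeping the factories async is what lets a constructor wait on a per-user credential lookup, as the browser, serpapi, and zapier entries do with getUserPluginAuthValue.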

Step 6: Add Your Plugin to manifest.json

Add your plugin to manifest.json, located in the api/app/langchain/tools directory. Follow the strict format for each of the fields of the plugin object. If your plugin requires authentication, add those details under authConfig as an array. The pluginKey should match the name property set in your class that extends Tool (wolfram in this example), which is also the key used in toolConstructors. Also note that the authField property must match the process.env variable name.

    {
      "name": "Wolfram",
      "pluginKey": "wolfram",
      "description": "Access computation, math, curated knowledge & real-time data through Wolfram|Alpha and Wolfram Language.",
      "icon": "https://www.wolframcdn.com/images/icons/Wolfram.png",
      "authConfig": [
        {
          "authField": "WOLFRAM_APP_ID",
          "label": "Wolfram App ID",
          "description": "An AppID must be supplied in all calls to the Wolfram|Alpha API. You can get one by registering at <a href='http://products.wolframalpha.com/api/'>Wolfram|Alpha</a> and going to the <a href='https://developer.wolframalpha.com/portal/myapps/'>Developer Portal</a>."
        }
      ]
    },
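Since the manifest format is strict, a small sanity check can catch a missing field before you start the app. The function below is a hypothetical helper, not part of LibreChat; it assumes that every field shown in the Wolfram entry above is required, which may be stricter than what the application actually enforces.

```javascript
// Hypothetical sanity check for a manifest entry; not part of LibreChat itself.
// Assumption: every field in the example Wolfram entry is treated as required.
function validateManifestEntry(entry) {
  const problems = [];
  for (const field of ['name', 'pluginKey', 'description', 'icon']) {
    if (typeof entry[field] !== 'string' || entry[field].length === 0) {
      problems.push(`Missing or empty field: ${field}`);
    }
  }
  for (const auth of entry.authConfig || []) {
    for (const field of ['authField', 'label', 'description']) {
      if (typeof auth[field] !== 'string' || auth[field].length === 0) {
        problems.push(`Missing or empty authConfig field: ${field}`);
      }
    }
  }
  return problems;
}

// This entry deliberately omits the authConfig description.
const entry = {
  name: 'Wolfram',
  pluginKey: 'wolfram',
  description: 'Access computation, math, curated knowledge & real-time data.',
  icon: 'https://www.wolframcdn.com/images/icons/Wolfram.png',
  authConfig: [{ authField: 'WOLFRAM_APP_ID', label: 'Wolfram App ID' }],
};
console.log(validateManifestEntry(entry));
// [ 'Missing or empty authConfig field: description' ]
```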

And that’s all there is to it! You’ve just created your first LibreChat plugin. Your plugin should now appear in the plugin store and be available for use in LibreChat.

Conclusion

The introduction of LibreChat and its plugins represents a significant step toward giving people the ability to run their own ChatGPT-like app locally or in the cloud. By leveraging the LangChain framework, LibreChat allows users to extend the functionality of large language models, enabling them to interact with external data sources and APIs and to perform complex tasks. The power of LibreChat plugins lies in the synergy between agents and tools, where agents make decisions and tools perform specific tasks.

With LibreChat plugins, users can automate workflows, access up-to-date information, and create new and original content. Additionally, this open-source project opens the door for developers to use and enhance the functionality of ChatGPT in various environments, including local setups and internal company networks. As LibreChat continues to evolve, with plans to port the plugins system to Python and integrate other agentic libraries like HuggingFace Transformers Agent and Microsoft Guidance, the possibilities for novel applications of large language models are truly endless.